Semantic Attribution Explanation

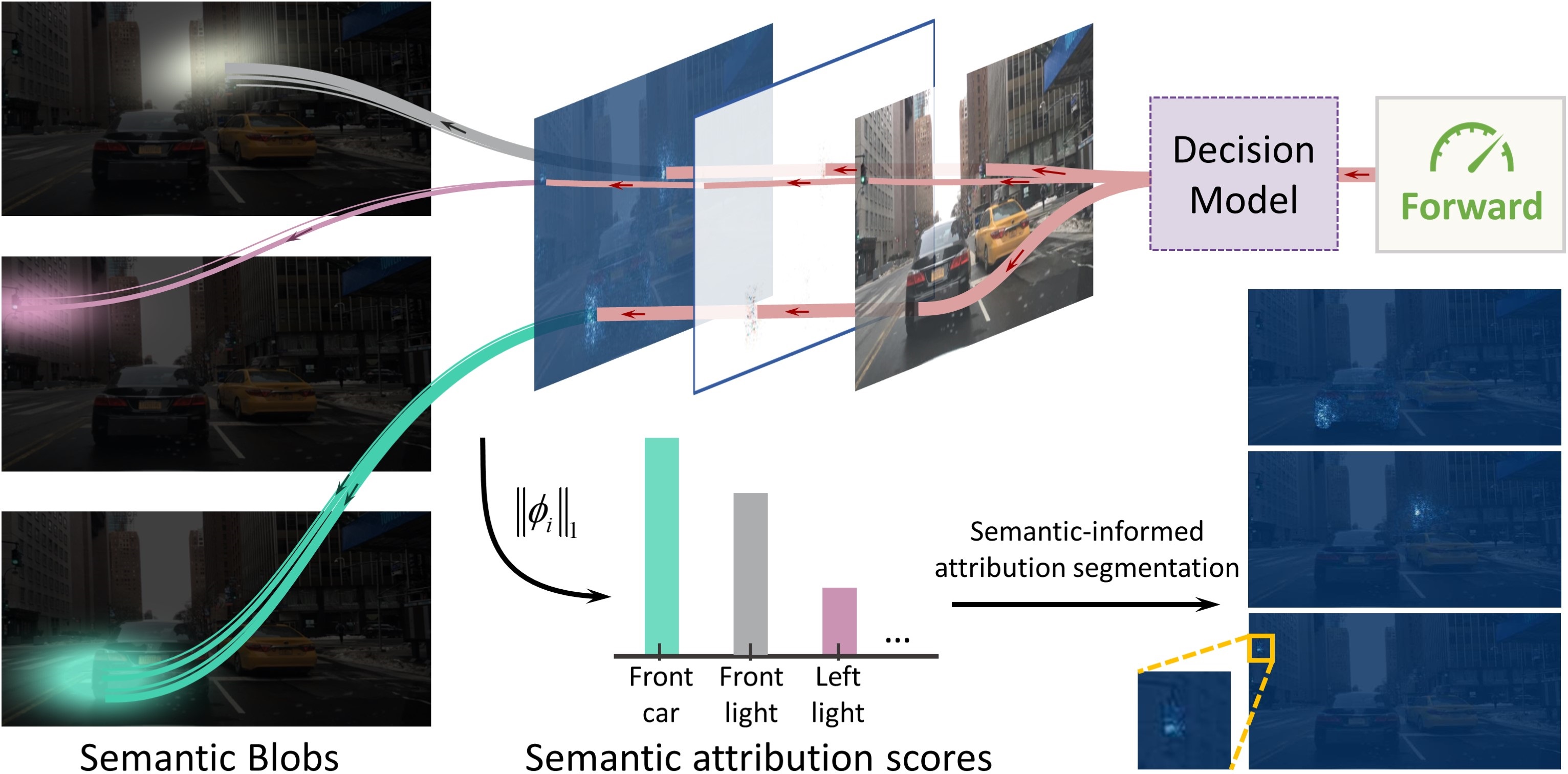

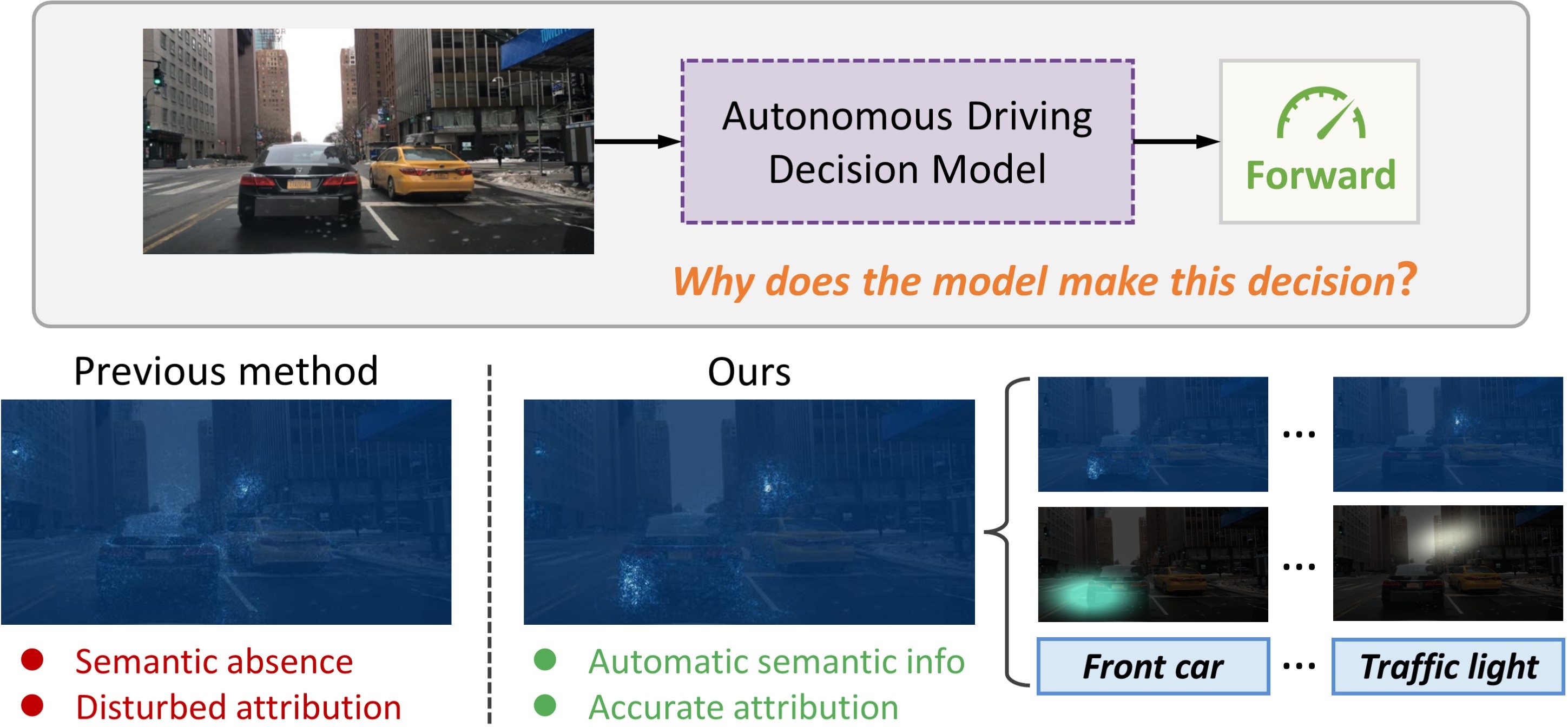

Understanding how autonomous driving models make decisions is essential for deploying them in real-world applications. Attribution methods are a primary research direction for explaining neural network decisions. In autonomous driving, however, purely numerical attributions fail to convey the complex semantic information in a scene and often yield explanations that are difficult to understand. This paper introduces a novel semantic attribution approach that identifies both where important features appear and what they represent. To establish semantic correspondences for attributions, we propose an interpretation framework that integrates unsupervised, differentiable semantic representations with the attribution computation model. To further improve attribution accuracy while preserving strong semantic correspondence, we design a Semantic-Informed Aumann-Shapley (SIAS) method, which defines the integration path using constraints derived from semantic scores and discrete gradients.
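Aumann-Shapley attribution credits each input feature with the integral, along a path from a baseline to the input, of the model's partial derivative in that feature times the path's rate of change in that feature. For a differentiable model the attributions always sum to f(input) - f(baseline), but how that total is split across features depends on the path, which is the quantity SIAS constrains. The sketch below shows only the generic discretized path integral, not SIAS. The toy model, both paths, and the names `path_attribution`, `straight_path`, and `feature_ordered_path` are hypothetical, and the semantic-score and discrete-gradient path constraints are not reproduced.

```python
import numpy as np

# Toy differentiable "model" (an illustrative assumption, not a driving network):
# f(x) = x0 * x1 + 2 * x2. The x0 * x1 interaction makes attributions path-dependent.
def model(x):
    return float(x[0] * x[1] + 2.0 * x[2])

def model_grad(x):
    # Analytic gradient of the toy model: (x1, x0, 2).
    return np.array([x[1], x[0], 2.0])

def path_attribution(grad_fn, path, steps=300):
    """Generic Aumann-Shapley path attribution (NOT the SIAS method).

    Approximates attr_i = integral_0^1 dF/dx_i(path(a)) * dpath_i/da da by summing
    grad(segment midpoint) * (segment displacement) over consecutive path points.
    """
    points = [path(a) for a in np.linspace(0.0, 1.0, steps + 1)]
    attr = np.zeros_like(points[0], dtype=float)
    for p, q in zip(points[:-1], points[1:]):
        attr += grad_fn(0.5 * (p + q)) * (q - p)
    return attr

def straight_path(baseline, x):
    # Straight-line path from baseline to x (the path used by Integrated Gradients).
    return lambda a: baseline + a * (x - baseline)

def feature_ordered_path(baseline, x):
    # Hypothetical alternative path: move one feature at a time, in index order.
    n = len(x)
    def path(a):
        t = np.clip(n * a - np.arange(n), 0.0, 1.0)
        return baseline + t * (x - baseline)
    return path

baseline = np.zeros(3)
x = np.array([2.0, 3.0, 1.0])

for name, path in [("straight", straight_path(baseline, x)),
                   ("ordered", feature_ordered_path(baseline, x))]:
    attr = path_attribution(model_grad, path)
    # Completeness check: attributions should sum to f(x) - f(baseline) on any path.
    print(name, np.round(attr, 6), round(attr.sum(), 6), model(x) - model(baseline))
```

With a zero baseline and input (2, 3, 1), the straight path credits roughly [3, 3, 2] and the feature-ordered path roughly [0, 6, 2]; both sum to f(x) - f(baseline) = 8. The interaction term x0 * x1 is credited differently depending on the path, which illustrates why the choice of integration path matters.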

Article

“Understanding Decision-Making of Autonomous Driving via Semantic Attribution.” *IEEE Transactions on Intelligent Transportation Systems*. Links: Article, GitHub Repo