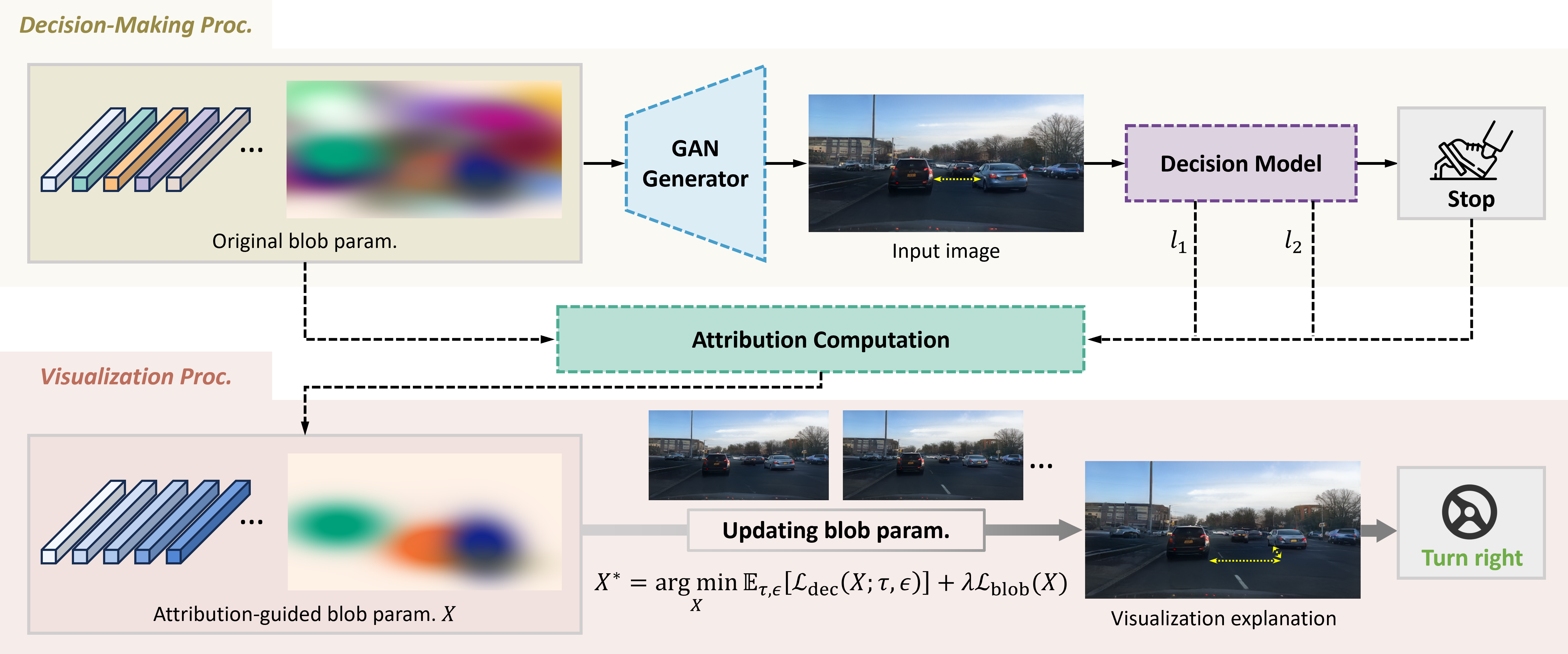

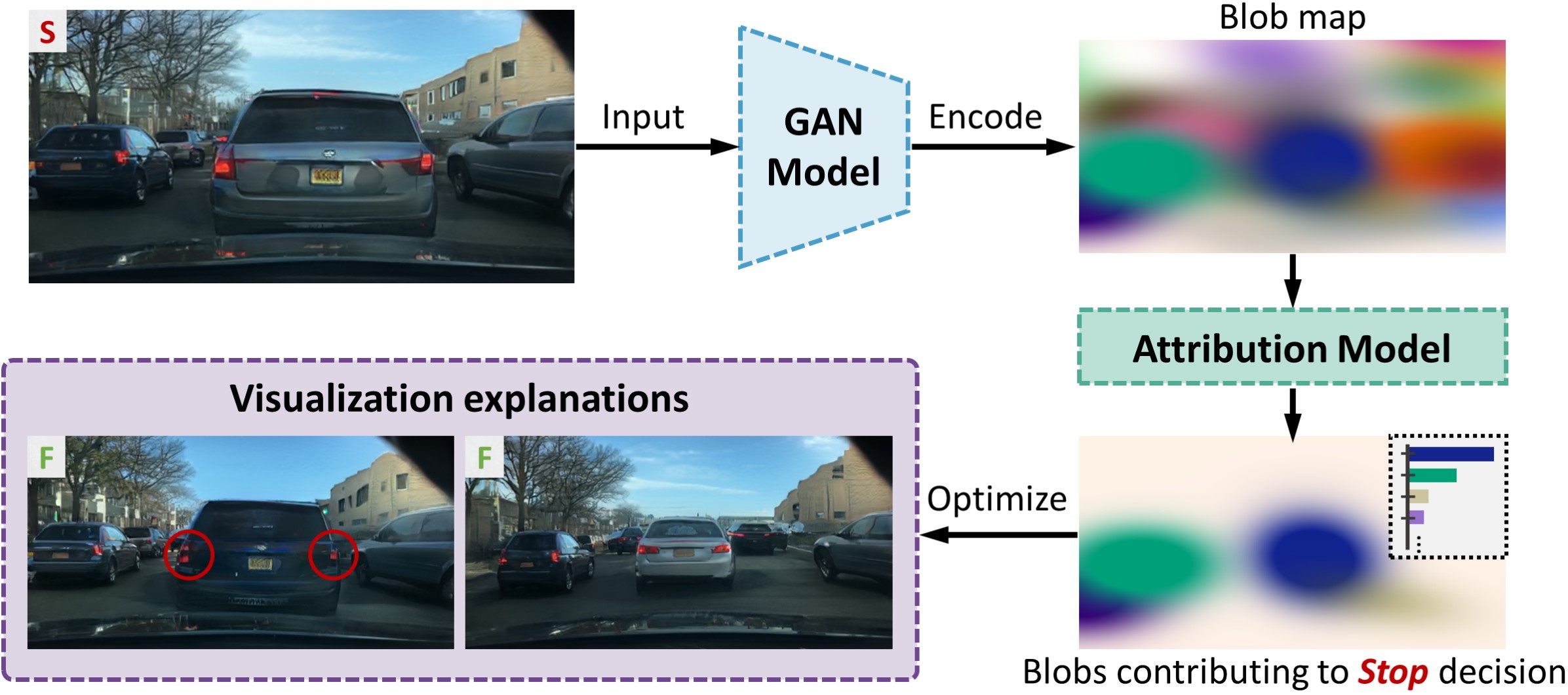

Attribution-Guided Visualization Explanation

Given the critical need for more reliable autonomous driving models, explainability has become a focal point within the research community. When testing autonomous driving models, even slight differences in perception can dramatically influence the decision-making process. Understanding the specific reasons why a model decides to stop or keep moving forward remains a significant challenge. This paper presents a novel attribution-guided visualization method for exploring the triggers behind decision shifts, providing clear insight into the "why" and "why not" of such decisions. More specifically, we propose a cumulative layer fusion attribution method that identifies the parameters most critical to decision-making. These attributions then guide the visualization updates, ensuring that changes in decisions are driven only by modifications to critical information. Furthermore, we develop an indirect regularization method that increases visualization quality without requiring extra hyperparameters. Experiments on large datasets demonstrate that our method produces valuable visualization explanations and outperforms state-of-the-art methods in both qualitative and quantitative evaluations.
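To make the idea of attribution-guided updates more concrete, here is a minimal sketch on a toy linear decision model. It is not the cumulative layer fusion attribution method described above: it substitutes a plain gradient-times-input attribution, and every name in it (`score`, `attribution`, `top_k_mask`, `guided_update`, the parameters `k` and `step`) is a hypothetical chosen for illustration. The only point is to show how an attribution mask can restrict an input update to the most critical features until the decision flips.

```python
# Illustrative sketch only: a toy linear "stop vs. keep moving" model.
# The attribution here is gradient-times-input, NOT the paper's
# cumulative layer fusion attribution; all names are hypothetical.

def score(w, b, x):
    """Decision score: positive means 'stop', non-positive means 'keep moving'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def attribution(w, x):
    """Gradient-times-input attribution (the gradient of a linear score is w)."""
    return [wi * xi for wi, xi in zip(w, x)]


def top_k_mask(attr, k):
    """Return a 0/1 mask selecting the k features with the largest |attribution|."""
    ranked = sorted(range(len(attr)), key=lambda i: abs(attr[i]), reverse=True)
    keep = set(ranked[:k])
    return [1.0 if i in keep else 0.0 for i in range(len(attr))]


def guided_update(w, b, x, k=2, step=0.1, max_iters=100):
    """Shift x along the score gradient, on masked features only, until the decision flips."""
    mask = top_k_mask(attribution(w, x), k)
    x = list(x)
    started_as_stop = score(w, b, x) > 0
    # Push the score down if the model currently says 'stop', up otherwise.
    direction = -1.0 if started_as_stop else 1.0
    for _ in range(max_iters):
        if (score(w, b, x) > 0) != started_as_stop:
            break  # decision has shifted
        x = [xi + direction * step * wi * mi for xi, wi, mi in zip(x, w, mask)]
    return x, mask
```

Calling `guided_update(w, b, x)` returns the modified input and the mask that was used. Features outside the mask are left untouched, so any resulting decision shift can be traced to changes in the highly attributed features alone.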

Article

“Exploring Decision Shifts in Autonomous Driving with Attribution-Guided Visualization.” Ongoing Work.